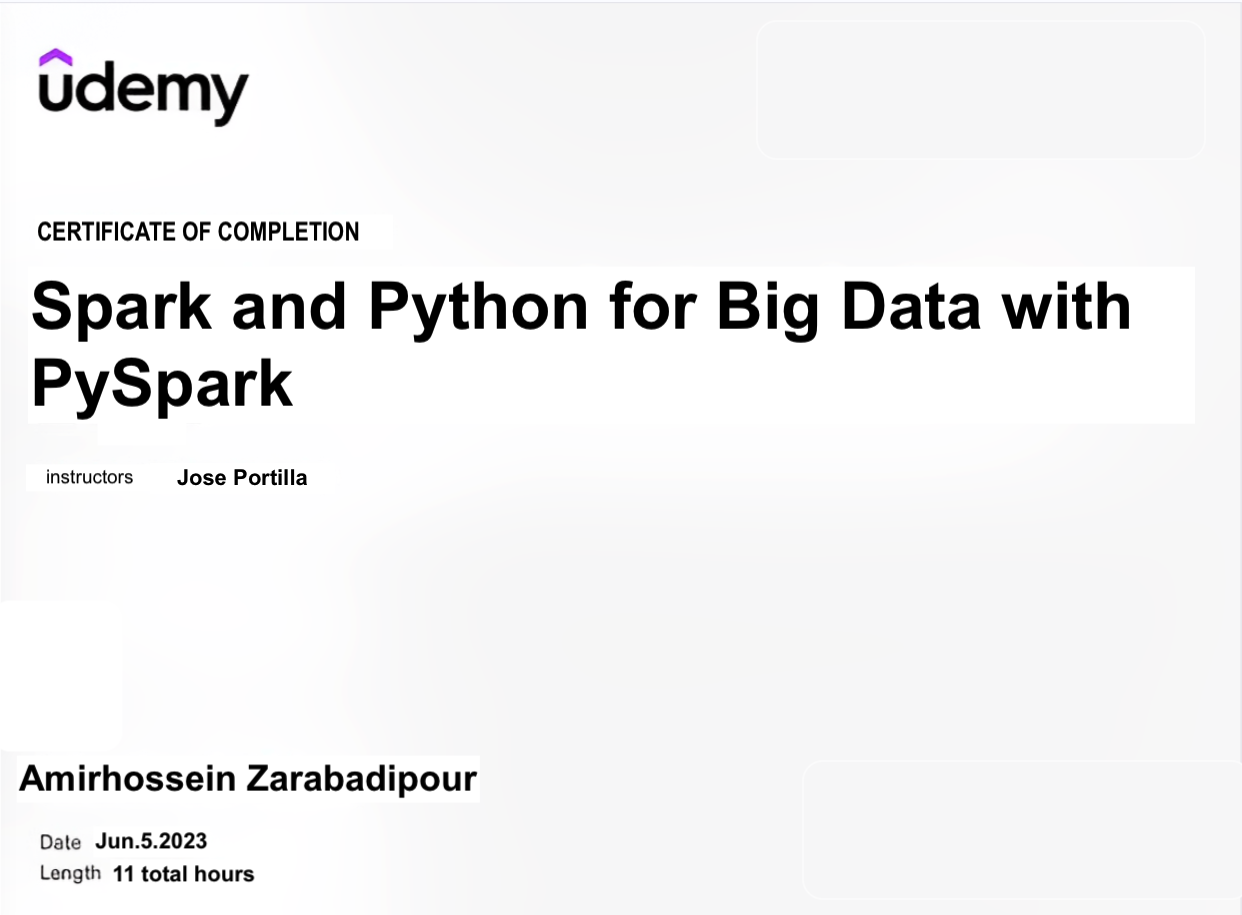

Spark and Python for Big Data with PySpark

This course provides comprehensive training in Spark DataFrames, machine learning, clustering, NLP, recommender systems, and Spark Streaming, enabling proficiency in big data processing and analysis using Spark and PySpark.

What I Have Learned:

You can find the course code and materials by clicking on the following button:

Introduction to Spark DataFrames: I learned the fundamentals of Spark DataFrames, which enabled me to work with structured, tabular data efficiently. By practicing common operations and transformations, I acquired the skills to process and analyze data effectively.

Machine Learning with MLlib: I ventured into the field of machine learning with the MLlib library in Spark. Through practical examples and hands-on exercises, I gained a solid understanding of linear regression and logistic regression. I also learned evaluation techniques to ensure accurate model predictions.
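To illustrate the idea behind linear regression and its evaluation, here is a minimal sketch in plain Python. It is not the course's code and does not use Spark: the function names `fit_line` and `rmse` and the sample data are my own assumptions for illustration. In Spark itself, the `pyspark.ml.regression.LinearRegression` estimator does the fitting at scale.

```python
import math

def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares (one feature)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope is the covariance of x and y divided by the variance of x.
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept

def rmse(xs, ys, slope, intercept):
    """Root mean squared error of the fitted line on the given points."""
    squared_errors = [(y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))

# Hypothetical sample data that lies exactly on y = 2x + 1.
xs = [1, 2, 3, 4]
ys = [3, 5, 7, 9]
slope, intercept = fit_line(xs, ys)
error = rmse(xs, ys, slope, intercept)
```

A lower RMSE on held-out data suggests better predictions, which is the kind of check the evaluation techniques in this section are meant to provide.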

Tree Methods: I acquired knowledge about decision tree-based algorithms, including decision trees and random forests. By understanding the underlying theory and exploring practical implementations using Spark's MLlib library, I gained proficiency in classification and regression tasks.

K-means Clustering: I explored the fascinating world of unsupervised learning through the K-means clustering algorithm. By studying the theory behind it and applying it to real-world datasets using Spark, I gained insights into how to group data based on patterns and interpret the results.
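The theory behind K-means can be sketched in a few lines of plain Python. This is an illustrative sketch of the standard assign-then-update loop (Lloyd's algorithm), not the course's code and not Spark's implementation; the function names and the sample points are assumptions of mine. In Spark, `pyspark.ml.clustering.KMeans` plays this role on distributed data.

```python
import random

def nearest_index(point, centroids):
    """Index of the centroid closest to point (squared Euclidean distance)."""
    return min(
        range(len(centroids)),
        key=lambda i: sum((p - c) ** 2 for p, c in zip(point, centroids[i])),
    )

def kmeans(points, k, max_iterations=50, seed=0):
    """Cluster points (tuples of numbers) into k groups; return centroids and labels."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct data points
    for _ in range(max_iterations):
        # Assignment step: group each point with its nearest centroid.
        clusters = [[] for _ in range(k)]
        for point in points:
            clusters[nearest_index(point, centroids)].append(point)
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for i, members in enumerate(clusters):
            if members:
                dims = zip(*members)
                new_centroids.append(tuple(sum(d) / len(members) for d in dims))
            else:
                new_centroids.append(centroids[i])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # converged: assignments can no longer change
        centroids = new_centroids
    labels = [nearest_index(point, centroids) for point in points]
    return centroids, labels

# Hypothetical data: two well-separated groups of 2-D points.
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
centroids, labels = kmeans(points, k=2)
```

Interpreting the result then comes down to inspecting the centroids and which points share a label.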

Introduction to Recommender Systems: I discovered the concepts and techniques behind recommender systems. These systems enable personalized suggestions based on user preferences. I obtained an overview of the building blocks and methodologies used to create effective recommendation engines.

Natural Language Processing (NLP): I delved into natural language processing using Spark. By learning about NLP techniques such as tokenization, stemming, and text classification, I gained the ability to analyze text and extract insights from it. The code-along project provided hands-on experience in applying these techniques.
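As a small illustration of tokenization, the first step in most text pipelines, here is a plain-Python sketch that splits text into lowercase tokens and counts term frequencies. It is not the course's code; the function names, the regular expression, and the sample sentence are assumptions for illustration. Spark's `pyspark.ml.feature` module offers `Tokenizer` and `HashingTF` for comparable steps on DataFrames.

```python
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into word tokens, dropping punctuation."""
    return re.findall(r"[a-z0-9']+", text.lower())

def term_frequencies(text):
    """Count how often each token occurs in the text."""
    return Counter(tokenize(text))

# Hypothetical sample sentence.
sample = "Spark makes big data simple. Big data, simple tools!"
tokens = tokenize(sample)
frequencies = term_frequencies(sample)
```

Counts like these are the raw features that a text classifier can then be trained on.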

Introduction to Spark Streaming: I explored the world of real-time data processing through Spark Streaming. By understanding the basics of streaming data processing and learning to work with streaming data in Spark, I acquired the skills to handle and analyze data in real-time scenarios.
